X Community Notes are not perfect, but socializing fact-checking should be encouraged

The social media platform X, previously known as Twitter, has made strides in battling misinformation with its Community Notes feature. As the Senior Editor of CryptoSlate, I have used the tool hands-on and seen both its advantages and its drawbacks in a world desperate for accurate information.

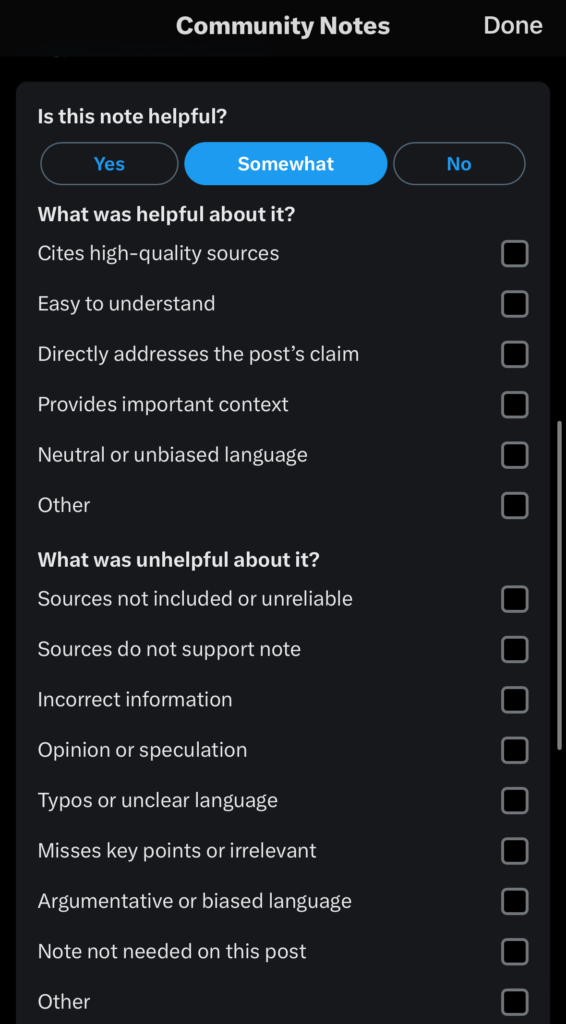

Community Notes allow users to add context to potentially deceptive posts. Once a note receives enough helpful votes from a diverse group of contributors, it’s publicly displayed, aiming to improve the information quality on the platform. This model, embodying ‘freedom of speech, not reach,’ has met with both applause and critique.
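To make the "enough helpful votes from a diverse group" idea concrete, here is a minimal toy sketch in Python. It is not X's scoring system, which is more involved than this. The group labels, the thresholds, and the `should_display` function are all hypothetical and exist only to show why a pile of one-sided votes should not be enough to publish a note.

```python
from dataclasses import dataclass

# Hypothetical thresholds, chosen only for illustration.
MIN_HELPFUL_PER_GROUP = 2  # helpful ratings required from each group
MIN_GROUPS = 2             # distinct viewpoint groups that must agree


@dataclass(frozen=True)
class Rating:
    rater_group: str  # hypothetical viewpoint label, e.g. "A" or "B"
    helpful: bool


def should_display(ratings: list[Rating]) -> bool:
    """Return True when enough distinct groups each rated the note helpful."""
    helpful_by_group: dict[str, int] = {}
    for rating in ratings:
        if rating.helpful:
            count = helpful_by_group.get(rating.rater_group, 0)
            helpful_by_group[rating.rater_group] = count + 1
    agreeing_groups = [
        group
        for group, count in helpful_by_group.items()
        if count >= MIN_HELPFUL_PER_GROUP
    ]
    return len(agreeing_groups) >= MIN_GROUPS


# Ten helpful votes from a single group: lots of volume, no diversity.
one_sided = [Rating("A", True)] * 10

# Fewer votes overall, but two groups independently found the note helpful.
cross_group = [
    Rating("A", True),
    Rating("A", True),
    Rating("B", True),
    Rating("B", True),
    Rating("B", False),
]

print(should_display(one_sided))    # False
print(should_display(cross_group))  # True
```

In this toy rule, volume alone never publishes a note; agreement has to come from contributors who would not normally be counted together.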

Ethereum co-founder Vitalik Buterin, among others, praised the feature for aligning with core blockchain values. However, concerns about its susceptibility to misuse have been raised, as evidenced by attacks on significant corporations’ advertisements. Delays in fact-checking also raise eyebrows, despite X’s assertions that speed has improved.

Improving Community Notes.

The Community Notes community forms a critical checkpoint. This system, while imperfect, fosters a level of transparency and community regulation.

However, some areas need improvement. Building a robust Community Notes team is paramount. Clear participation criteria must be set to prevent the platform from becoming a mere battleground of opinions.

I was given access to write Community Notes today under a pseudonym. This anonymity allows me to participate in fact-checking while remaining safe from retribution or attacks online should there be disagreement over a Community Note.

Yet, is this anonymity dangerous? The feature allows for fact-checking any X content, including competitors’ articles. Should I believe other media companies are sharing false information, I can now add a note that the Community Notes community will vote on.

However, Community Notes members can also attempt to discredit any X user, including competitors, at will. So the question remains: Is the rest of the fact-checking community armed with the tools to counter malicious attempts to discredit competitors? I would never look to abuse this system; I believe in free speech, but not everyone will take this position.

Further, while X’s fact-checking tools are seemingly under decentralized control, there appears to be little vetting in deciding who is qualified to fact-check others. Were a small group able to gain access to Community Notes and work together to spread misinformation and validate false claims with further manipulated Community Notes, how would X be able to handle it? Would a centralized team under X leadership remove the Community Note? What’s to stop them from eliminating other notes if they can do that? Further, how would we even know notes were removed?

Despite my reservations, this feature offers a unique opportunity. It gives a voice to users in regulating information quality. While I don’t always align with Elon Musk’s views, this feature mirrors an intriguing ethos.

Community-driven fact-checking represents a move towards self-regulation that should be supported. It’s a step toward a future where social media can be participatory, interactive, and accountable.

However, the current iteration is imperfect, and I’ve outlined several key issues that must be addressed. As with so many tools at our disposal, how we use it determines whether it brings value or is manipulated to deepen the problem further.

I believe Community Notes should look to the following areas for improvements rather than abandoning community-driven fact-checking:

- Establish knowledge or experience-based participation criteria and vetting to prevent misuse

- Shorten the review time for submitted notes to enable faster fact-checking

- Increase transparency around the removal of notes to maintain credibility

- Develop safeguards against coordinated misinformation campaigns

- Incorporate algorithmic fact-checking to support manual reviewers

- Encourage participation from credible experts/organizations

- Set up a team to thoroughly assess the pros and cons of reviewer anonymity

- Collect user feedback and iterate on the feature over time. Continually evolving Community Notes based on how it’s working in practice is essential for optimization.

- Encourage participation from diverse perspectives, not just experts/organizations. Tapping the wisdom of crowds from many walks of life can complement input from credentialed experts.

The wisdom of crowds.

The basic idea here is that large, diverse groups can collectively come to more accurate answers and decisions than individual experts. The diversity of perspectives balances out personal biases.

Early work by Francis Galton in 1907 showed that a crowd at a fair guessed the weight of an ox more accurately than animal experts. This demonstrated the power of aggregated opinions. James Surowiecki popularized the term in his 2004 book The Wisdom of Crowds. He showed how collective intelligence emerges under the right conditions.

Recent studies have continued to demonstrate the ‘wisdom of crowds’ phenomenon. In a 2017 study, groups answered general knowledge questions more accurately than individuals, with larger groups doing best.

Researchers at MIT found that groups of people predicted startup success more accurately than individual experts. Crowdsourcing has been shown to aid complex problem-solving in domains like mathematics, engineering, and computer science. Thus, perhaps we simply need more Community Notes editors and a larger crowd to impart further wisdom.

However, some research also shows crowds can converge on misinformation and become dangerous mobs under certain conditions.

Diversity of opinion and independence of thought are vital requirements.
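Both halves of this argument can be illustrated with a small simulation. The sketch below is a toy model, not Galton's data: the true weight, the spread of guesses, the crowd size, and the shared bias are all hypothetical numbers I picked for illustration. It compares the error of the averaged guess with the typical error of a single guesser, first when guesses are independent and then when everyone leans the same way.

```python
import random
from statistics import mean

TRUE_WEIGHT = 1000.0  # hypothetical true value, not Galton's data
NOISE_SD = 100.0      # hypothetical spread of individual guesses
CROWD_SIZE = 800      # hypothetical number of guessers


def simulate_guesses(n: int, shared_bias: float, seed: int) -> list[float]:
    """Each guess = truth + a bias everyone shares + that person's own noise."""
    rng = random.Random(seed)
    return [TRUE_WEIGHT + shared_bias + rng.gauss(0.0, NOISE_SD) for _ in range(n)]


def crowd_error(guesses: list[float]) -> float:
    """Error of the aggregated (averaged) guess."""
    return abs(mean(guesses) - TRUE_WEIGHT)


def typical_individual_error(guesses: list[float]) -> float:
    """Average error a single guesser makes on their own."""
    return mean([abs(g - TRUE_WEIGHT) for g in guesses])


# Independent crowd: personal errors point in different directions.
independent = simulate_guesses(CROWD_SIZE, shared_bias=0.0, seed=7)

# Herded crowd: same personal noise, but everyone shares one lean.
herded = simulate_guesses(CROWD_SIZE, shared_bias=80.0, seed=7)

print(f"typical individual error: {typical_individual_error(independent):.1f}")
print(f"independent crowd error:  {crowd_error(independent):.1f}")
print(f"herded crowd error:       {crowd_error(herded):.1f}")
```

Averaging cancels errors that point in different directions, but it cannot cancel a lean that the whole crowd shares. Adding more like-minded contributors would not fix the herded case, which is why diversity and independence matter more than raw headcount.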

My final thoughts… currently.

While still a work in progress, Community Notes shows promise as a crowdsourced approach to fact-checking and regulating misinformation. As with any system relying on public contribution, bias and manipulation are risks. However, with thoughtful design iterations and participation incentives, the wisdom and collective intelligence of the crowd could positively impact online information quality.

Community-driven moderation aligns with blockchain’s decentralized ethos. If executed responsibly, it could point the way forward for social platforms seeking to balance free speech with accountability. The road ahead will require continued vigilance, transparency, and an openness to change. But with care and creativity, we may yet forge online communities capable of navigating complex truths.
